Application Development

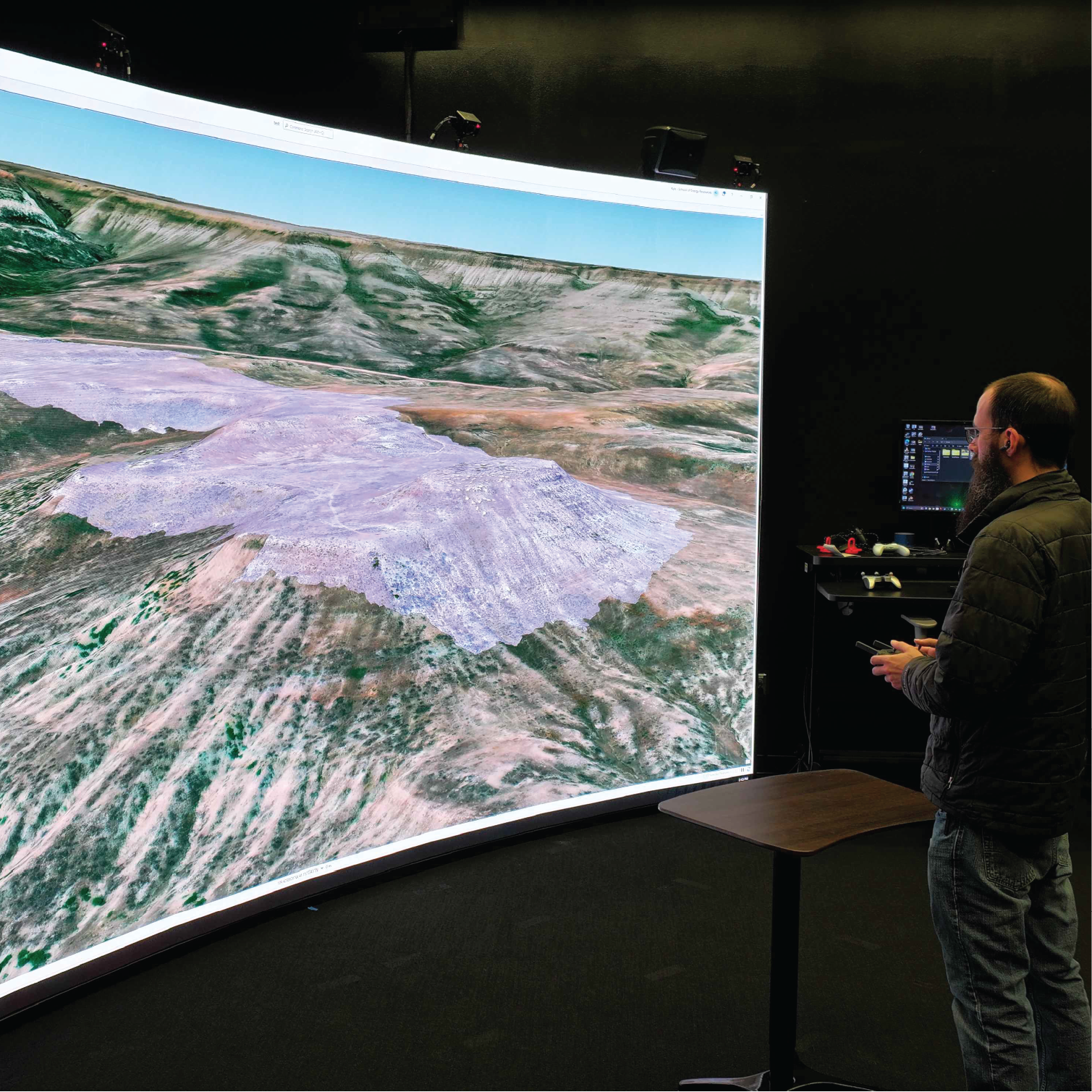

The 3D Visualization Center houses a powerful 16-foot by 9-foot direct-view LED display capable of stereoscopic 3D rendering. With this technology, the 3D Visualization Center can showcase complex concepts and data in 3D to large groups at once, asking no more of viewers than putting on a pair of glasses.

This cutting-edge display, installed in 2024 in collaboration with the School of Computing, features an extremely high refresh rate, positional tracking, and stereoscopic 3D. Several of our most commonly used software suites support stereo 3D on the display, including ArcGIS Pro, Visual Molecular Dynamics, ParaView, Blender, and Unity applications. The display is also used to show SAGE3 boards, facilitating collaboration with other computing centers around campus.
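
Head-tracked stereo on a fixed wall display is commonly implemented with an off-axis (asymmetric) perspective frustum for each eye, recomputed every frame from the tracked head position. The sketch below is a minimal illustration under stated assumptions, not the Center's actual implementation: it assumes the screen is a flat 16 ft by 9 ft rectangle centered at the origin in the z = 0 plane, the viewer stands at z > 0 facing it, the eyes are offset only along the x axis, and the interpupillary distance is 63 mm. All function and constant names are hypothetical.

```python
from dataclasses import dataclass

FT_TO_M = 0.3048
# Assumed screen geometry: 16 ft x 9 ft, centered at the origin in the z = 0 plane.
SCREEN_W = 16 * FT_TO_M
SCREEN_H = 9 * FT_TO_M


@dataclass
class Frustum:
    """Near-plane extents, in the form a glFrustum-style projection call expects."""
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye, near=0.1, width=SCREEN_W, height=SCREEN_H):
    """Asymmetric frustum for one eye at (x, y, z) meters, with z > 0 in front of the screen."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges (relative to the eye) onto the near plane.
    s = near / ez
    return Frustum(
        left=(-width / 2 - ex) * s,
        right=(width / 2 - ex) * s,
        bottom=(-height / 2 - ey) * s,
        top=(height / 2 - ey) * s,
        near=near,
    )


def stereo_frusta(head, ipd=0.063, near=0.1):
    """(left_eye, right_eye) frusta; assumes the eyes are offset from the head only along x."""
    hx, hy, hz = head
    return (
        off_axis_frustum((hx - ipd / 2, hy, hz), near),
        off_axis_frustum((hx + ipd / 2, hy, hz), near),
    )


if __name__ == "__main__":
    left_eye, right_eye = stereo_frusta((0.5, 0.2, 3.0))
    print(left_eye)
    print(right_eye)
```

Each eye's view matrix would also translate the scene by the negative of that eye's position. In a full system, the eye positions would be derived from the complete tracked head pose, including rotation, rather than assumed to lie along x.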

The Visualization Center supports and develops software for all currently available virtual reality headsets, from standalone devices such as the Meta Quest series to dedicated PC-based solutions such as the Valve Index, HTC Vive, and other high-end tethered or wireless devices.

Augmented reality platforms generally feature transparent displays or camera-based visual passthrough, allowing users to remain firmly rooted in the real world while interacting with virtual data. These platforms are ideal for collaborative work, field training, and instructional tasks. Depending on the platform, they may be able to track and recall room layouts and objects, allowing location- and item-relevant data to be summoned as appropriate.

The Visualization Center develops and maintains software across most common platforms, including all major desktop operating systems (Windows, macOS, and Linux), mobile platforms (Android and iOS), and various web-based environments.

Drone-based remote sensing

In the fall of 2023, the 3D Visualization Center began developing a drone-based remote sensing program. This initiative involved certifying team members under FAA Part 107 to operate UAVs legally and safely, as well as acquiring two drone platforms along with a suite of sensor payloads. The overarching goal was to build in-house capacity for both academic research and applied geospatial data collection.

In 2025, the Center significantly expanded its sensing capabilities by acquiring the HySpex Mjolnir VS-620, a state-of-the-art hyperspectral imaging spectrometer. This dual-sensor system captures 200 spectral bands at 3.0 nm resolution in the visible to near-infrared range (400–1000 nm) and 300 spectral bands at 5.1 nm resolution in the short-wave infrared range (900–2500 nm). The system also includes integrated LiDAR, allowing for topographic mapping and precise geospatial alignment. Intended primarily for mineral exploration, the Mjolnir will support critical mineral mapping and geological characterization across a variety of terrains.
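
Hyperspectral analyses usually begin by selecting the band closest to a wavelength of interest. The sketch below is a minimal, hypothetical helper for that lookup using a binary search over sorted band centers. The 3.0 nm grid starting at 400 nm is an illustrative assumption only; in practice, the band-center wavelengths should be read from the sensor's calibration or file metadata rather than computed.

```python
from bisect import bisect_left


def nearest_band(band_centers_nm, target_nm):
    """Index of the band whose center is closest to target_nm (centers sorted ascending)."""
    if not band_centers_nm:
        raise ValueError("band_centers_nm is empty")
    i = bisect_left(band_centers_nm, target_nm)
    if i == 0:
        return 0
    if i == len(band_centers_nm):
        return len(band_centers_nm) - 1
    below, above = band_centers_nm[i - 1], band_centers_nm[i]
    # Ties go to the shorter-wavelength band.
    return i if (above - target_nm) < (target_nm - below) else i - 1


# Illustrative grid only: 200 centers spaced 3.0 nm apart, starting at 400 nm.
ILLUSTRATIVE_VNIR_NM = [400.0 + 3.0 * k for k in range(200)]

if __name__ == "__main__":
    print(nearest_band(ILLUSTRATIVE_VNIR_NM, 670.0))
```

Because the visible/near-infrared and short-wave infrared ranges overlap between 900 and 1000 nm, a combined product would also need a rule for which sensor's bands to prefer in that overlap.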

To support high-resolution photogrammetry, the team also acquired a Sony ILX-LR1, a 61-megapixel full-frame mirrorless camera, paired with a 24mm F2.8 G wide-angle prime lens. This configuration enables the creation of centimeter-scale orthomosaics and digital surface models with exceptional accuracy and detail.
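
Whether an orthomosaic reaches centimeter scale depends mostly on its ground sample distance (GSD), the width of ground covered by one pixel. Under a simple pinhole model with a nadir-pointing camera over flat terrain, GSD = sensor width × altitude / (focal length × image width in pixels). The sketch below applies that formula and its inverse. The sensor width (35.7 mm) and image width (9504 px) are assumed approximate values for a 61-megapixel full-frame sensor and should be checked against the manufacturer's published specifications; the function names are hypothetical.

```python
def gsd_cm_per_px(altitude_m, focal_mm, sensor_width_mm, image_width_px):
    """Nadir ground sample distance (cm/pixel) for a pinhole camera over flat ground."""
    # mm / mm cancels, leaving meters of ground per pixel; convert to centimeters.
    return sensor_width_mm * altitude_m / (focal_mm * image_width_px) * 100.0


def altitude_for_gsd(target_cm, focal_mm, sensor_width_mm, image_width_px):
    """Inverse of gsd_cm_per_px: flight altitude (m) needed for a target GSD."""
    return (target_cm / 100.0) * focal_mm * image_width_px / sensor_width_mm


# Assumed approximate values for a 61 MP full-frame sensor (verify before use).
SENSOR_W_MM = 35.7
IMAGE_W_PX = 9504
FOCAL_MM = 24.0

if __name__ == "__main__":
    for alt in (50, 100, 120):
        gsd = gsd_cm_per_px(alt, FOCAL_MM, SENSOR_W_MM, IMAGE_W_PX)
        print(f"{alt} m -> {gsd:.2f} cm/px")
```

Under these assumptions, a flight at 100 m works out to roughly 1.6 cm per pixel. Terrain relief, camera tilt, and lens distortion all shift the real value, so this is a planning estimate rather than a guarantee.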

Amid growing national security concerns surrounding Chinese-manufactured drones, particularly those produced by DJI, the team made the strategic decision to pursue an NDAA-compliant UAV, ensuring compatibility with federally funded projects. In the summer of 2024, the team procured the Harris Aerial Carrier Hx8, an electric coaxial octocopter engineered for heavy-lift applications. Built from carbon fiber for strength and durability, the Hx8 offers motor redundancy, allowing it to continue flying even if two rotors fail. It has a payload capacity of approximately 8 kilograms, with flight times varying based on payload weight and elevation.

Geospatial Information Systems (GIS)

The 3D Visualization Center has established a sophisticated GIS server architecture composed of private- and public-facing components. Servers behind the University of Wyoming's firewall host proprietary or preliminary datasets and support internal development efforts. Servers outside the firewall act as a public-facing GIS hub for datasets, web services, and web applications, hosting both internal and external projects.

The 3D Visualization Center develops relational databases on an enterprise ArcGIS Spatial Database Engine (SDE) platform backed by PostgreSQL. SDE provides an efficient, flexible framework for managing large, spatially enabled datasets that integrate directly with ArcGIS applications and tools. Our databases are engineered to enhance and extend established schemas commonly used by state and federal entities, such as the U.S. Geological Survey’s Geologic Map Schema (GeMS). This approach ensures compatibility with regional and national data standards and simplifies data integration and exchange across platforms and institutions.
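
One common way to extend a standard schema without breaking compatibility is to leave its core tables untouched and add project-specific tables that reference them by key. The sketch below illustrates that pattern using Python's built-in `sqlite3` module as a lightweight stand-in for an enterprise PostgreSQL geodatabase. The core table and field names are loosely modeled on GeMS, with geometry omitted, and should be checked against the GeMS documentation. The `MapUnitCriticalMinerals` table and all sample rows are hypothetical placeholders.

```python
import sqlite3

SCHEMA = """
-- Core tables, loosely modeled on GeMS (geometry columns omitted).
CREATE TABLE DescriptionOfMapUnits (
    MapUnit TEXT PRIMARY KEY,
    Name    TEXT NOT NULL,
    Age     TEXT
);
CREATE TABLE MapUnitPolys (
    MapUnitPolys_ID    INTEGER PRIMARY KEY,
    MapUnit            TEXT NOT NULL REFERENCES DescriptionOfMapUnits(MapUnit),
    IdentityConfidence TEXT,
    DataSourceID       TEXT
);
-- Hypothetical project extension: a separate table keyed on MapUnit,
-- so the standard tables above stay unchanged.
CREATE TABLE MapUnitCriticalMinerals (
    MapUnit          TEXT NOT NULL REFERENCES DescriptionOfMapUnits(MapUnit),
    Element          TEXT NOT NULL,
    ConcentrationPPM REAL,
    PRIMARY KEY (MapUnit, Element)
);
"""


def build_demo_db():
    """In-memory database with the schema above and one placeholder record per table."""
    con = sqlite3.connect(":memory:")
    con.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    con.executescript(SCHEMA)
    con.execute("INSERT INTO DescriptionOfMapUnits VALUES (?, ?, ?)",
                ("Kx", "Example Formation", "Cretaceous"))
    con.execute("INSERT INTO MapUnitPolys (MapUnit, IdentityConfidence, DataSourceID) "
                "VALUES (?, ?, ?)", ("Kx", "certain", "DAS1"))
    con.execute("INSERT INTO MapUnitCriticalMinerals VALUES (?, ?, ?)", ("Kx", "Nd", 42.0))
    con.commit()
    return con


def minerals_by_polygon(con):
    """Join polygons to their unit descriptions and to the extension records."""
    return con.execute(
        """
        SELECT p.MapUnitPolys_ID, d.Name, m.Element, m.ConcentrationPPM
        FROM MapUnitPolys AS p
        JOIN DescriptionOfMapUnits AS d ON d.MapUnit = p.MapUnit
        JOIN MapUnitCriticalMinerals AS m ON m.MapUnit = p.MapUnit
        ORDER BY p.MapUnitPolys_ID, m.Element
        """
    ).fetchall()


if __name__ == "__main__":
    print(minerals_by_polygon(build_demo_db()))
```

Keeping extensions in separate, key-linked tables means a dataset can still be exported in the standard schema by simply leaving the extension tables out.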

Datasets within the database are also shared as web services: live data streams that provide access to current information without the need to repeatedly download or store large files locally. These web services are hosted in SER’s REST directory, an online catalog of geospatial resources accessible via standard URLs.
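
Because the services are exposed through standard URLs, any HTTP client can query them. The sketch below builds a query URL following the ArcGIS REST API's feature-layer query pattern (`.../FeatureServer/<layer>/query` with the `where`, `outFields`, `returnGeometry`, and `f` parameters) and includes a small fetch helper. The services root and service name are hypothetical placeholders, not SER's actual endpoints, and the helper names are illustrative.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def feature_query_url(services_root, service_name, layer_id, where="1=1",
                      out_fields=("*",), return_geometry=True):
    """Build an ArcGIS REST feature-layer query URL that returns JSON."""
    params = {
        "where": where,                     # SQL-style attribute filter; 1=1 selects all rows
        "outFields": ",".join(out_fields),  # attribute fields to return
        "returnGeometry": "true" if return_geometry else "false",
        "f": "json",                        # response format
    }
    base = f"{services_root.rstrip('/')}/{service_name}/FeatureServer/{layer_id}/query"
    return f"{base}?{urlencode(params)}"


def fetch_features(url, timeout=30):
    """Fetch a query URL and return the 'features' array from the JSON response."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp).get("features", [])


if __name__ == "__main__":
    # Hypothetical placeholder endpoint; substitute a real service URL before fetching.
    print(feature_query_url("https://gis.example.edu/arcgis/rest/services",
                            "Geology/BedrockMap", 0, where="MapUnit = 'Kx'"))
```

Since the data stay on the server, each request returns whatever the service holds at that moment, which is what keeps web-service consumers current without re-downloading files.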

Current projects include the design and implementation of specialized database schemas to support DOE-funded initiatives, such as Class VI permits for carbon sequestration and Carbon Ore, Rare Earth, and Critical Minerals (CORE-CM) projects. These schemas are being developed to support complex geospatial, geologic, and regulatory datasets, enabling scalable and efficient data management and analysis.

The 3D Visualization Center is committed to developing GIS web applications within the ESRI framework, recognizing that ESRI's products and tools complement the GIS systems used by most state, federal, and private organizations.

The primary web application development platform used by the 3D Visualization Center is ArcGIS Experience Builder. Interfaces designed with Experience Builder are interactive, approachable for users at all levels of GIS proficiency, and require no additional software purchase or installation. Users simply open a link to the web application, where datasets can be viewed, analyzed, and downloaded. Behind the scenes, Experience Builder applications communicate in real time with SER’s PostgreSQL SDE and image servers, which store geospatial data, associated attributes, and georeferenced map images. SER also maintains a dedicated web server that hosts non-spatial documents accessible via URLs embedded within Experience Builder applications.

The 3D Visualization Center takes pride in producing high-quality cartographic products, leveraging ArcGIS Pro’s data management and spatial analysis tools to deliver products that are both visually compelling and analytically robust. Whether for print or interactive applications, our cartographers adhere to GIS best practices to ensure clarity, accuracy, and usability. From detailed maps and thematic layers to immersive 3D visualizations, our ArcGIS-based cartography empowers decision-making, enhances communication, and clearly conveys complex geospatial information to diverse audiences.

Digital Asset Creation

Digital assets are used throughout every 3D Visualization Center project to visualize a wide array of objects, places, data, and ideas. The team draws on numerous approaches to produce the right result for each project, which enhances the communication of topics and ideas across a variety of visual media.

One of the core components of many applications developed by the 3D Visualization Center is 3D modeling. A wide variety of objects and environments are created this way for use in applications, videos, figures, and diagrams. The technique is highly flexible, allowing assets to be built to extremely detailed specifications or to loosely defined guidelines. Software used in asset creation includes Blender for free-form modeling, and Autodesk Fusion 360 and Revit for precise architectural and engineering-based modeling. Models are designed with their target environments in mind, such as game engines, animation, and 3D printing.
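
Preparing a model for a specific target such as 3D printing usually involves checking its topology. A common pair of checks is that the mesh is watertight, meaning every edge is shared by exactly two faces, and consistently oriented, meaning no directed edge appears twice so neighboring faces wind the same way. The sketch below is a minimal, hypothetical version of those checks on a face list of vertex indices. Production tools perform many more checks, such as self-intersection and minimum wall thickness.

```python
from collections import Counter


def _edges(face):
    """Directed edges (a, b) around one polygon, closing back to the first vertex."""
    return [(face[i], face[(i + 1) % len(face)]) for i in range(len(face))]


def is_watertight(faces):
    """True if every undirected edge is shared by exactly two faces."""
    counts = Counter(tuple(sorted(e)) for face in faces for e in _edges(face))
    return bool(counts) and all(n == 2 for n in counts.values())


def is_consistently_oriented(faces):
    """True if no directed edge appears twice, i.e. adjacent faces wind the same way."""
    seen = set()
    for face in faces:
        for e in _edges(face):
            if e in seen:
                return False
            seen.add(e)
    return True


# A tetrahedron with consistent winding: closed, so printable in principle.
TETRA = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]

if __name__ == "__main__":
    print(is_watertight(TETRA), is_consistently_oriented(TETRA))
    print(is_watertight(TETRA[:3]))  # one face removed leaves a hole
```

Game engines are usually more forgiving of open meshes, which is one reason the same model may need different preparation for each target.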

Models created by the team go through multiple stages of development before they reach the final product. To bring a model from the pre-visualization stage to the final rendering stage, the team creates advanced materials that bring it to life. The Adobe Substance 3D suite is used to achieve the desired look, which can range from a highly detailed, realistic appearance to a soft, stylized one. These materials encode the visual properties of the object being replicated, such as color, reflectivity, metallic properties, and transparency, allowing for accurate recreation of real-world objects.
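
Many physically based material workflows, including the glTF 2.0 metallic-roughness model, derive a surface's base reflectance (F0) from its base color and metallic value, then vary reflectance with viewing angle using Schlick's Fresnel approximation. The sketch below shows those two formulas with hypothetical function names. It assumes linear (not sRGB-encoded) color values and the conventional 4% reflectance for non-metals, and it is not a description of how Substance shades internally.

```python
def lerp(a, b, t):
    """Linear interpolation from a to b by t in [0, 1]."""
    return a + (b - a) * t


def base_reflectance(base_color, metallic, dielectric_f0=0.04):
    """Per-channel F0: about 4% for non-metals, tinted by the base color for metals."""
    return tuple(lerp(dielectric_f0, c, metallic) for c in base_color)


def fresnel_schlick(f0, cos_theta):
    """Schlick's approximation: reflectance rises toward 1 at grazing angles."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


if __name__ == "__main__":
    gold_like = (1.0, 0.78, 0.34)  # hypothetical linear base color
    f0 = base_reflectance(gold_like, metallic=1.0)
    print(f0, fresnel_schlick(f0, cos_theta=0.2))
```

Storing metallic as a separate map lets a single material mix metal and non-metal regions, such as a painted surface with scratches exposing bare metal.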

Animation is useful for translating complex concepts into an easily digestible format, aiding education and outreach. The team creates both 2D and 3D animation to illustrate concepts or produce eye-catching motion graphics. The Center renders high-quality animation on a suite of powerful computational resources, which also makes longer, more advanced animations feasible. Animated models are integrated into most application development projects to build an immersive experience that engages the end user.

Education & Outreach

The 3D Visualization Center supports the local and educational communities in a variety of ways, sharing its tools and experience to help educate people on the power and utility of 3D visualization and extended reality technology.

The 3D Visualization Center creates programs for a wide range of purposes: informing the public, teaching a new concept, or presenting data in a way that helps the scientists behind the research better understand it. To that end, the 3D Visualization Center builds programs for whichever platform best suits the task, whether that is the immersion of virtual reality, the collaborative capabilities of augmented reality, the mobility of a tablet or phone, or in-house visualization tools for the desktop.

Not all who wander are lost! Sometimes, they are just on a field trip. The 3D Visualization Center takes hardware on the road, including tablets and mobile workstations for data capture or outreach, as well as standalone virtual reality headsets that bring immersive experiences to other departments on campus or even across the country.

The 3D Visualization Center is dedicated to empowering students of all ages and fostering curiosity about building 3D applications. In the past, the team has supported the Computer Science Research Experience for Undergraduates program, hosted by the School of Computing, by teaching weekly lessons on software development, 3D modeling, and deployment to target devices.

Ever focused on supporting the community, the 3D Visualization Center has long offered free public tours of its facility to showcase the technology, capabilities, and applications of its work. Tours have featured head-tracked stereoscopic 3D applications on the new direct-view LED display and the now-retired 3D CAVE, workforce development applications and reconstructed environments in PC-driven VR, collaborative chemistry lessons in augmented reality, and several client projects deployed to mobile devices.

The internship program is one of the 3D Visualization Center's greatest sources of pride. Students from a wide range of disciplines gain hands-on education and experience in everything the 3D Visualization Center has to offer. From 3D modeling to data capture to software development, the internship program equips students with the skills they need to excel in their industry of choice and gives them opportunities to apply those skills in the field. The program also teaches students to incorporate the education they have already received into these new workflows, bringing their education full circle.

Data Capture

360 photography captures an environment using a specialized device outfitted with multiple cameras mounted on a spherical chassis. The 3D Visualization Center houses two such devices, with still-frame resolution up to 16K and stereoscopically separated captures for use in immersive head-mounted displays. These devices capture photographs in all directions, which are stitched together into an equirectangular image that can be projected onto a ‘skybox’ that wraps the image around a spherical surface. 360 photography and video can be used in virtual tours, education, training, outreach, marketing and advertising, and gaming and entertainment.
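
The equirectangular layout maps longitude to the horizontal axis and latitude to the vertical axis, which is what allows a flat panorama to wrap onto a sphere. The sketch below converts between a viewing direction and pixel coordinates in both directions. Axis conventions differ between tools; this sketch assumes a Unity-style frame (+y up, +z forward at the image center, +x to the right), and the 16384 by 8192 image size in the example is illustrative. Function names are hypothetical.

```python
import math


def direction_to_equirect(x, y, z, width, height):
    """Pixel (u, v) in a width x height equirectangular image for a view direction."""
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, z)                          # -pi..pi, 0 = straight ahead (+z)
    lat = math.asin(max(-1.0, min(1.0, y / norm)))  # -pi/2..pi/2, positive = up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def equirect_to_direction(u, v, width, height):
    """Unit view direction for pixel (u, v); the inverse of direction_to_equirect."""
    lon = (u / width - 0.5) * 2 * math.pi
    lat = (0.5 - v / height) * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


if __name__ == "__main__":
    W, H = 16384, 8192
    print(direction_to_equirect(0.0, 0.0, 1.0, W, H))  # straight ahead
    print(direction_to_equirect(0.0, 1.0, 0.0, W, H))  # straight up
```

A renderer sampling a spherical skybox effectively runs the first function for every screen pixel's view ray to find which panorama pixel to display.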

Photogrammetry uses multiple overlapping photographs of a real-world object or scene to create a three-dimensional digital representation. Structure-from-motion (SfM) algorithms align these photographs by recovering each camera's relative position and orientation in space. This technique produces reasonably accurate, textured meshes from traditional cameras, phones, or drone-based imagery.
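
Once structure from motion has estimated the camera poses, each matched feature is placed in 3D by triangulating the rays cast from the cameras that observed it. The sketch below shows the midpoint method for two rays, one simple triangulation approach. Real SfM pipelines also estimate the poses themselves, use many views per point, and refine everything with bundle adjustment. The sketch assumes ideal pinhole cameras with identical intrinsics and no rotation between them, so a pixel maps to a ray by a simple offset and scale. All names are hypothetical.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add_scaled(p, d, s):
    return tuple(x + s * y for x, y in zip(p, d))


def pixel_ray(u, v, focal_px, cx, cy):
    """Camera-frame ray through pixel (u, v) for an ideal pinhole camera looking along +z."""
    return ((u - cx) / focal_px, (v - cy) / focal_px, 1.0)


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p, q = add_scaled(c1, d1, s), add_scaled(c2, d2, t)
    return tuple((x + y) / 2 for x, y in zip(p, q))


if __name__ == "__main__":
    # Two unrotated cameras 2 m apart observing the same feature.
    r1 = pixel_ray(200.0, 400.0, 1000.0, 0.0, 0.0)
    r2 = pixel_ray(-200.0, 400.0, 1000.0, 0.0, 0.0)
    print(triangulate_midpoint((0.0, 0.0, 0.0), r1, (2.0, 0.0, 0.0), r2))
```

With noisy feature matches the two rays rarely intersect exactly, which is why the midpoint of their closest approach, rather than a true intersection, is used.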

Gaussian splatting is a volumetric rendering technique that represents a scene with many semi-transparent, ellipsoid-shaped 3D Gaussians rather than traditional polygon meshes. Combined with spherical harmonics, which let each splat's color change with viewing direction, this approach can reproduce transparency, reflectivity, and lighting as captured. It has distinct advantages over traditional photogrammetry, which struggles with reflective, transparent, or thin elements, but splats are not nearly as versatile as meshes from a rendering perspective.
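
At render time, the splats overlapping a pixel are sorted by depth and alpha-blended front to back, with each splat's opacity attenuated by its projected 2D Gaussian footprint. The sketch below composites a single pixel this way. It assumes the 3D Gaussians have already been projected to screen-space means and 2×2 covariances, and that each splat's color has already been evaluated from its spherical harmonics for the current view. The clamping and early-termination thresholds are illustrative values of the kind used in common implementations, and all names are hypothetical.

```python
import math


def gaussian_falloff(dx, dy, cov):
    """exp(-0.5 * d^T Sigma^-1 d) for a symmetric 2x2 screen-space covariance."""
    (a, b), (_, c) = cov
    det = a * c - b * b
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    # The inverse of [[a, b], [b, c]] is [[c, -b], [-b, a]] / det.
    m = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * m)


def composite_pixel(px, py, splats, max_alpha=0.99, min_alpha=1 / 255,
                    min_transmittance=1e-4):
    """Front-to-back blending of splats given as (depth, (mx, my), cov, opacity, rgb)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for _depth, (mx, my), cov, opacity, rgb in sorted(splats, key=lambda s: s[0]):
        alpha = min(max_alpha, opacity * gaussian_falloff(px - mx, py - my, cov))
        if alpha < min_alpha:
            continue  # negligible contribution at this pixel
        for k in range(3):
            color[k] += rgb[k] * alpha * transmittance
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; farther splats are hidden
    return tuple(color), transmittance
```

Because every splat stays a soft, semi-transparent primitive rather than a surface, this blending is what lets splats capture glass and fine fibers, and also why they do not slot as easily as meshes into lighting, physics, or editing workflows.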

One of the tools the 3D Visualization Center has on hand is the Artec Leo, a wireless structured-light scanner. By projecting light patterns onto an object and measuring how they deform across its surface, the scanner captures the object's three-dimensional shape. With this device, the 3D Visualization Center can create accurate, to-scale digital models of objects either in-house or in the field. Because it uses non-coherent light sources such as LEDs or projectors, this approach avoids the potential safety concerns associated with laser-based capture.
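
One widely used structured-light technique projects a sequence of binary Gray-code stripe patterns, so that each camera pixel records an on/off sequence identifying which projector column it sees. The camera and projector can then be triangulated like a stereo pair. The sketch below decodes such a sequence and converts the resulting disparity to depth under a highly simplified, rectified geometry. It is illustrative only: it does not describe the Artec Leo's actual patterns or processing, and all names and parameters are hypothetical.

```python
def binary_to_gray(n):
    """Gray code of n: adjacent integers differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_to_binary(g):
    """Invert the Gray code by XOR-ing in every right shift of g."""
    n = g
    shift = g >> 1
    while shift:
        n ^= shift
        shift >>= 1
    return n


def encode_projector_column(col, n_bits):
    """Bits a camera pixel would see for a given column (most significant pattern first)."""
    g = binary_to_gray(col)
    return [(g >> (n_bits - 1 - i)) & 1 for i in range(n_bits)]


def decode_projector_column(bits):
    """Projector column from per-pattern on/off observations, most significant first."""
    g = 0
    for bit in bits:
        g = (g << 1) | (1 if bit else 0)
    return gray_to_binary(g)


def depth_from_disparity(camera_x, projector_x, focal_px, baseline_m):
    """Z = f * b / disparity for a rectified camera-projector pair (simplified model)."""
    disparity = camera_x - projector_x
    if disparity <= 0:
        raise ValueError("non-positive disparity: point cannot be triangulated")
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    bits = encode_projector_column(500, n_bits=10)  # simulated capture
    col = decode_projector_column(bits)
    print(col, depth_from_disparity(550.0, col, focal_px=1000.0, baseline_m=0.1))
```

Gray codes are preferred over plain binary here because a pixel straddling a stripe boundary can misread only one bit, shifting the decoded column by at most one rather than by a large jump.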

Originally a portmanteau of the words "light" and "radar," LIDAR is now commonly expanded as "Light Detection and Ranging" or "Laser Imaging, Detection, and Ranging." This method measures the surface of an object by emitting laser pulses at it and timing how long the reflected light takes to return to the sensor. The process produces highly accurate and precise 3D maps of objects and environments, but it can be constrained by cost, weather conditions, poor penetration through dense forest or vegetation, and high data-processing overhead. The method is widely used across industries including surveying, archaeology, agriculture, engineering, and transportation, and for mapping digital surface and elevation models.
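
The time-of-flight principle reduces to range = c × t / 2, since each pulse travels to the surface and back. Combining each range with the beam's scan angles yields a 3D point. The sketch below shows both steps with hypothetical names and an assumed angle convention (azimuth measured in the x-y plane from +x, elevation measured up from that plane). It also shows why timing precision matters: a 1 ns timing error corresponds to roughly 15 cm of range.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(t_seconds):
    """One-way range: the pulse travels to the surface and back, hence the division by 2."""
    return SPEED_OF_LIGHT_M_S * t_seconds / 2.0


def beam_to_xyz(range_m, azimuth_rad, elevation_rad):
    """Sensor-frame point; azimuth in the x-y plane from +x, elevation up from that plane."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    print(range_from_round_trip(1e-9))  # range equivalent of a 1 ns timing error
    r = range_from_round_trip(667e-9)
    print(beam_to_xyz(r, math.radians(30), math.radians(-10)))
```

Airborne systems additionally transform each sensor-frame point into world coordinates using the platform's GNSS position and inertial orientation at the moment of each pulse.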