How We Can Help:

Data Management Planning

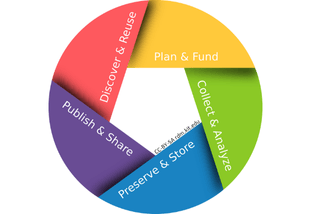

The Libraries provide guidance for writing Data Management (and Sharing) Plans (DMPs or DMSPs), whether they are required as part of a grant proposal or written electively to support effective practices through the full data lifecycle. We facilitate access to DMP Tool for funder-specific planning prompts and considerations, and can also help you locate sample DMPs in your discipline and provide feedback on draft DMPs.

DMP requirements vary by funder and discipline of research. You should always check grant and funder guidelines and adhere to any specific requirements they communicate.

Need help with other parts of your grant proposal? Reach out to the Research Development Office (RDO) in the Research and Economic Development Division (REDD) or browse their Proposal Tool Kit.

Data Curation & Sharing

The Wyoming Data Repository (WDR) supports University of Wyoming and Wyoming Community College researchers in sharing their research data. Every data record in WDR receives a DOI (digital object identifier), helping you meet publisher and funder data sharing requirements while also making your data easier to find, reuse, and cite. Depositors also receive data curation support from the Libraries. WDR is an open access repository operated by UW Libraries in collaboration with the Advanced Research Computing Center (ARCC).

Request an account: visit the WDR homepage and click ‘Sign Up’.

Data Documentation

Data documentation takes many forms, including READMEs, metadata, codebooks, data dictionaries, and DMPs. UW Libraries provides guidance on data documentation as an extension of our support for data curation and sharing.

READMEs

A README provides information about a data file and is intended to help ensure that the data can be interpreted accurately. READMEs can help:

- Explain directory structures and file naming conventions, including any abbreviations used

- Document changes to files or file names within a folder

- Document data processing and cleaning methods

- Provide comprehensive metadata, including:

  - project and contributor information

  - funder information

  - data collection and analysis methods

  - instruments, software, and hardware utilized

  - related publications or other research outputs

All WDR deposits must be accompanied by a README formatted as a .txt or .md file.
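As a rough illustration of how the items above can translate into a file, the following Python sketch writes an empty README.md skeleton with one section per item. The section headings, the `build_readme` and `write_readme` function names, and the "TODO" placeholder are assumptions made for this example. They are not taken from the WDR ReadMe Template, which should be preferred for actual deposits.

```python
from pathlib import Path

# Illustrative headings based on the list above.
# Assumption: these are NOT the official WDR ReadMe Template sections.
SECTIONS = [
    "Project and contributor information",
    "Funder information",
    "Directory structure and file naming conventions",
    "Changes to files or file names",
    "Data collection and analysis methods",
    "Data processing and cleaning methods",
    "Instruments, software, and hardware",
    "Related publications and other research outputs",
]


def build_readme(title, sections=SECTIONS):
    """Return a Markdown README skeleton with one placeholder section per heading."""
    lines = [f"# {title}", ""]
    for heading in sections:
        lines += [f"## {heading}", "", "TODO", ""]
    return "\n".join(lines)


def write_readme(folder, title):
    """Write the skeleton to README.md inside an existing folder and return its path."""
    path = Path(folder) / "README.md"
    path.write_text(build_readme(title), encoding="utf-8")
    return path
```

Calling `write_readme("my_dataset", "Example dataset")` on an existing folder would create `my_dataset/README.md`, which can then be filled in by hand. Changing the file name to `README.txt` would work equally well, since the skeleton is plain text.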

Want help with your README or other data documentation? Reach out to the Data Services Librarian, or try these resources:

- WDR ReadMe Template

- Guide to writing "readme" style metadata (Cornell University)

- Disciplinary metadata guide (Digital Curation Centre)

- Open directory of metadata standards (Community-driven project, via Research Data Alliance)

Metadata

Metadata is structured information that describes key aspects of data or another digital object. It is expressed through elements defined by a metadata schema and corresponding values that follow formatting and vocabulary standards.

In data repositories, metadata facilitates data search, retrieval, reuse, and citation. Metadata can also be harvested for broader indexing and discoverability.
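To make the idea of schema elements and standardized values more concrete, here is a minimal Python sketch. The four-element schema, the toy controlled vocabulary, the `validate` function, and the sample record are all hypothetical, invented for this example. They do not represent the schema used by WDR or by any real metadata standard.

```python
import re

# Toy controlled vocabulary (assumption for illustration only).
RESOURCE_TYPES = {"Dataset", "Software", "Text"}

# Hypothetical mini-schema: element name -> rule its value must satisfy.
SCHEMA = {
    "title": lambda v: isinstance(v, str) and v.strip() != "",
    "creator": lambda v: isinstance(v, str) and v.strip() != "",
    # Formatting standard: a four-digit year stored as text.
    "publication_year": lambda v: isinstance(v, str)
    and re.fullmatch(r"\d{4}", v) is not None,
    # Vocabulary standard: the value must come from the controlled list.
    "resource_type": lambda v: isinstance(v, str) and v in RESOURCE_TYPES,
}


def validate(record):
    """Return a list of problems; an empty list means the record fits the schema."""
    problems = []
    for element, is_valid in SCHEMA.items():
        if element not in record:
            problems.append(f"missing element: {element}")
        elif not is_valid(record[element]):
            problems.append(f"invalid value for {element}: {record[element]!r}")
    return problems


# Made-up sample record used only to exercise the sketch.
record = {
    "title": "Example stream temperature measurements",
    "creator": "Doe, Jane",
    "publication_year": "2024",
    "resource_type": "Dataset",
}
```

Here `validate(record)` returns an empty list because every element is present and every value follows its rule. Real repositories typically apply far richer schemas, but the pattern of named elements with constrained values is the same.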

FAIR Data

FAIR stands for Findable, Accessible, Interoperable and Reusable. The FAIR Data Principles were developed and endorsed by researchers, publishers, funding agencies and industry partners in 2016 and are designed to enhance the value of all digital resources. The FAIR principles do not prescribe any particular technology, standard, or specification, but rather act as a guide to researchers to aid them in evaluating whether their current data curation practices are sufficient to render their data Findable, Accessible, Interoperable, and Reusable.

FAIR Resources:

- What is FAIR? (How to FAIR)

- The FAIR Principles (The Turing Way)

- How FAIR are your data? (EUDAT)

Further Data Management Resources

- SPARC

Browse data sharing policies of U.S. government agencies. SPARC is a global organization that promotes open access, open data, and open education.

- Funder Guidelines (from DMPTool)

The DMPTool website links to the Data Management Plan (DMP) requirements of funding agencies and organizations, on which its DMP templates are based. Links to funding proposal guidelines are included. (Note: Smaller agencies may not be listed here. Check agency websites for funder policies and guidelines.)

- National Science Foundation (NSF) Data Sharing Policies

NSF Directorates and Divisions have discipline-specific guidelines to supplement the NSF agency-wide policies on data sharing and Data Management Plan requirements.

- NIH Data Sharing Policy

The National Institutes of Health's policy statement on data sharing and additional information on the implementation of this policy. There are also links to information about the more stringent policy that took effect in 2023.

- NIH Institute & Center Data Sharing Policies

Data sharing is a priority across NIH. To this end, many institutes, centers, and research programs have instituted specific data sharing policies in addition to the trans-NIH policies. These policies are listed in a table on the linked page. Note that individual funding opportunities may specify other requirements or expectations, so be sure to read all instructions carefully.

- re3data.org

The Registry of Research Data Repositories provides a portal to locate suitable places to deposit digital research data for public accessibility and to locate data sets for reuse. Search for repositories by subject, content type, or country.

- Figshare

Figshare promotes open sharing of research outputs including data files up to 20 GB. Search, browse or upload data files.

- Zenodo

A data repository that accepts research outputs from across all disciplines and assigns a DOI (digital object identifier) during upload.

- GitHub

A software repository. Some services may require a subscription fee.

- Dryad

A non-profit repository that integrates data curation with manuscript submission. Primarily focused on biological sciences. Many publishers are member organizations.

- NIH Data Sharing Repositories

Listing of data repositories supported by the National Institutes of Health or approved for use by grantees.

- Data.gov

Search for research data sets published by government agencies.

- Harvard Dataverse

Repository open to all scientific data from all disciplines worldwide. Advanced search and filtering functions.

- DOE Data Explorer

Locate datasets from the U.S. Department of Energy.

- IEEE DataPort

Free upload and access of any research dataset up to 2 TB from IEEE, a professional organization advancing engineering, computing, and technology information.

- ICPSR

The Inter-university Consortium for Political and Social Research, managed by the University of Michigan, is a free data repository for research outputs in the social sciences.

- OAD Data Repositories

Listing of open data repositories by discipline with links to websites. Derived from the Open Access Directory. Open data are accessible and freely reusable without additional permission.

If you're looking for a general introduction to best practices for research data management, check out some of these training resources. Digital Scholarship can also provide local, customized training.

- RDM Handbook (OpenAIRE)

- The Research Data Management Workbook (by Kristin Briney, 2023, free online version shared under CC BY-NC)

- Data Management in Large-Scale Education Research (by Crystal Lewis, 2024, free online version shared under CC BY-NC-SA)

- Intro to Data Management (UW - ARCC)

- Data Management Training (DataONE)